This blog post was inspired by a classmate who ran into pip’s dependency hell while setting up Python code for machine learning. For people who are immersed in academia and new to industry practice, environment configuration and package managers are unfamiliar territory, so it is no surprise that they crash into this hell.

Sometimes it’s simply impossible to find a combination of package versions that do not conflict. Welcome to dependency hell.

The era without package managers

Early programming languages had no package manager. C and C++ still show this (although for the C family it is largely because its programmers like to reinvent the wheel to squeeze out the best performance). In this primitive period, if you needed to include something, you either used precompiled dynamic-link libraries or copy-pasted the code directly into your own source directory.

This method is obviously flawed: dependency versions are difficult to manage, security updates are easily missed, and installation is cumbersome and error-prone. For this reason, package managers came into being.

Early era package managers

Early package managers resolve dependencies and install packages into a single shared location. This follows the usual virtue of saving disk space: if two dependencies A and B both depend on C, we can obviously install only one copy of C for both A and B to use. Furthermore, if I have two projects that both depend on A, then putting A directly in a shared directory, e.g., Python’s ~/.local/lib/python3.12/site-packages, is also a good choice.

This has been an advantage of Linux for a long time. For historical reasons, Windows long lacked a built-in package manager and a unified installation location. Although it is still possible to reuse common libraries through DLLs, doing so is normally time-consuming and laborious. Therefore, Windows software tends to ship all the DLLs it needs in its own directory, or even bundle them directly into the exe file. This often makes Windows programs larger than their Linux counterparts.

But this virtue leads to a big problem: conflicting dependencies.

This dependency issue arises when several packages have dependencies on the same shared packages or libraries, but they depend on different and incompatible versions of the shared packages. Solving the dependencies for one software may break the compatibility of another in a similar fashion to whack-a-mole. If app1 depends on libfoo 1.2, and app2 depends on libfoo 1.3, and different versions of libfoo cannot be simultaneously installed, then app1 and app2 cannot simultaneously be used. [1]
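The libfoo situation can be sketched in a few lines of Python. This is only an illustration, not how any real package manager works: the `REQUIREMENTS` table and the `shared_version` helper are made up for this post, and each app simply lists the libfoo versions it accepts.

```python
from typing import Dict, List, Optional

# Hypothetical requirement table: app name -> versions of libfoo it accepts.
REQUIREMENTS: Dict[str, List[str]] = {
    "app1": ["1.2"],
    "app2": ["1.3"],
}


def shared_version(requirements: Dict[str, List[str]]) -> Optional[str]:
    """Return one version acceptable to every app, or None if none exists."""
    acceptable = None
    for versions in requirements.values():
        acceptable = set(versions) if acceptable is None else acceptable & set(versions)
    if not acceptable:
        return None
    # Prefer the highest version; compare numerically, not as strings.
    return max(acceptable, key=lambda v: tuple(int(p) for p in v.split(".")))


# With only one install slot for libfoo, app1 and app2 cannot both be satisfied.
print(shared_version(REQUIREMENTS))
# If app1 also accepted 1.3, a single shared copy would be enough.
print(shared_version({"app1": ["1.2", "1.3"], "app2": ["1.3"]}))
```

When the intersection is empty, a manager that keeps one copy per library has no valid answer, and that is the whack-a-mole described above.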

Unfortunately, many early package managers simply could not install different versions of a library at the same time. What’s even more terrifying is that pip, the package manager of Python, one of the most popular languages today, is among them: a single environment can hold only one version of each package.

To solve this problem, package managers have developed roughly several approaches. Here we look at Node.js, Python’s virtual environments, Docker, and Nix.

Package management of Node.js

To avoid conflicting dependencies, npm, the default package manager of Node.js, chose from the beginning to place dependencies in a fixed folder, node_modules, inside the project. Each dependency has its own node_modules, so that dependencies do not interfere with each other.

For example, suppose we add two dependencies A and B, where A depends on C 1.0.0 and B depends on C 2.0.0. The project’s node_modules will then look roughly like this:

    /node_modules
    /node_modules/A
    /node_modules/B
    /node_modules/...
    /node_modules/A/node_modules/C@1.0.0
    /node_modules/A/node_modules/... (other dependencies)
    /node_modules/B/node_modules/C@2.0.0
    /node_modules/B/node_modules/...

    graph TD
    Project --> nm1[node_modules]
    nm1 --> A
    nm1 --> B
    A --> nmA[node_modules]
    B --> nmB[node_modules]
    nmA --> c1[C 1.0.0]
    nmB --> c2[C 2.0.0]

This effectively solves the problem of conflicts between sub-dependencies.

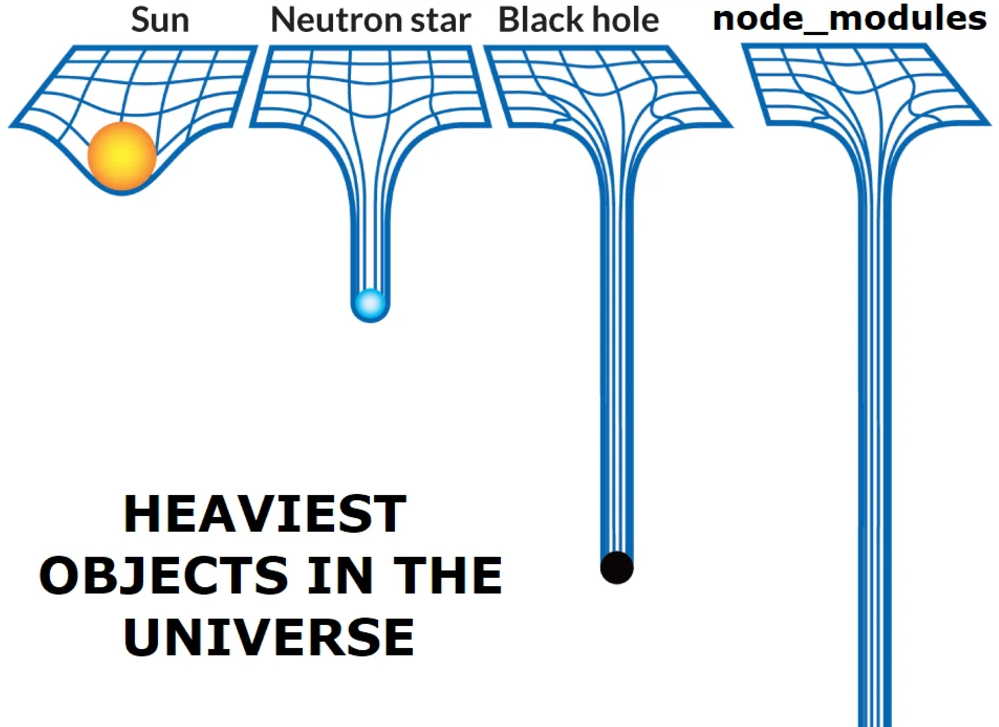
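Why does the nested layout keep A and B apart? Roughly, when a package asks for C, the lookup starts in that package’s own node_modules and only then walks up towards the project root. The sketch below models that walk in Python. It is a simplified model, not Node’s actual resolver: the `resolve` function and the `VERSION` marker file are invented for the illustration, and details such as package.json entry points and file extensions are ignored.

```python
import tempfile
from pathlib import Path
from typing import Optional


def resolve(package: str, start_dir: Path, root: Path) -> Optional[Path]:
    """Walk from start_dir up to root, returning the first node_modules/<package> found."""
    current = start_dir
    while True:
        candidate = current / "node_modules" / package
        if candidate.is_dir():
            return candidate
        if current == root or current == current.parent:
            return None
        current = current.parent


with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp).resolve()
    # Layout from the example: A and B each carry their own copy of C.
    c_for_a = project / "node_modules" / "A" / "node_modules" / "C"
    c_for_b = project / "node_modules" / "B" / "node_modules" / "C"
    for c_dir, version in ((c_for_a, "1.0.0"), (c_for_b, "2.0.0")):
        c_dir.mkdir(parents=True)
        (c_dir / "VERSION").write_text(version)

    # Each package finds the copy of C that sits in its own node_modules.
    for name in ("A", "B"):
        found = resolve("C", project / "node_modules" / name, project)
        print(name, "sees C", (found / "VERSION").read_text())
```

Because the nearest node_modules wins, A never notices that B uses a different C.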

However, npm’s approach also causes other problems.[2] One comes from a path-length limit. Some systems, such as older versions of Windows, cannot handle paths longer than about 260 characters. A segment like package_name/node_modules often takes 20 characters or more, so problems can appear at around ten levels of nested dependencies once the project’s own path is counted. npm’s approach also produces so many duplicate dependencies in a deep dependency tree that people joke that node_modules is heavier than a black hole.

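To solve these problems, pnpm, a currently popular Node.js package manager, uses some clever ideas. pnpm keeps each package version once in a shared store on the local disk (~/.pnpm-store in older versions; the default location depends on the pnpm version and the operating system). Instead of copying complete dependencies into each project’s node_modules, it links files from the store into the project. Inside the project, all packages sit side by side in one flat directory, node_modules/.pnpm, and symbolic links (symlinks) connect each package to its dependencies, so a dependency chain like A -> B -> C -> D no longer produces deeply nested paths.

npm (nested):

node_modules/A/node_modules/B/node_modules/C/node_modules/D/...

pnpm (assuming only A is a direct dependency; <version> stands for each package’s version):

node_modules/A (symlink into node_modules/.pnpm)

node_modules/.pnpm/A@<version>/node_modules/A

node_modules/.pnpm/B@<version>/node_modules/B

node_modules/.pnpm/C@<version>/node_modules/C

node_modules/.pnpm/D@<version>/node_modules/D

Because only declared dependencies are linked where a package can find them, each package can access only its declared direct dependencies, which prevents accidental access (i.e., if you didn’t declare D yourself, you cannot reach D through the A -> B -> C -> D chain). The resolved versions are recorded in the lockfile pnpm-lock.yaml.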

Through these techniques, pnpm saves disk space and solves the problem of dependency hell to a large extent.
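The disk-saving part can be imitated with a toy store in Python. This is a sketch under assumptions, not pnpm’s real layout or code: the `install` helper, the `store` directory name and the single `index.js` file per package are all invented here, and the linking is done with plain hard links.

```python
import os
import tempfile
from pathlib import Path


def install(store: Path, project: Path, name: str, version: str, content: str) -> Path:
    """Put the package file into the store once, then hard-link it into the project."""
    stored = store / f"{name}@{version}" / "index.js"
    if not stored.exists():
        stored.parent.mkdir(parents=True)
        stored.write_text(content)
    target = project / "node_modules" / name / "index.js"
    target.parent.mkdir(parents=True)
    os.link(stored, target)  # second directory entry for the same file on disk
    return target


with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)
    store = base / "store"
    first = install(store, base / "project1", "C", "1.0.0", "module.exports = 1;\n")
    second = install(store, base / "project2", "C", "1.0.0", "module.exports = 1;\n")
    # Both projects refer to one and the same file, so its bytes are stored only once.
    print(os.path.samefile(first, second))
```

Ten projects depending on the same version of C would still cost the space of one copy, which is the whole point of a shared store.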

Virtual Environment of Python

Python takes a different route: instead of changing how pip installs dependencies, it relies on virtual environments (venv).

The venv module supports creating lightweight “virtual environments”, each with their own independent set of Python packages installed in their site directories. A virtual environment is created on top of an existing Python installation, known as the virtual environment’s “base” Python, and may optionally be isolated from the packages in the base environment, so only those explicitly installed in the virtual environment are available. [3]

In short, venv creates an independent, virtual runtime environment instead of using the common site-packages. In this way, projects using different virtual environments will never interfere with each other, solving the dependency conflict problem between different projects.
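Most people create environments with `python -m venv`, but the same thing can be done from Python through the standard library’s `venv` module, which makes it easy to peek inside. The following is a minimal sketch: the directory name `demo-env` is arbitrary, and pip is left out of the environment only to keep the example quick and offline.

```python
import sys
import tempfile
import venv
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    env_dir = Path(tmp) / "demo-env"  # hypothetical environment name
    # with_pip=False keeps the sketch fast and offline; real projects usually want pip.
    venv.EnvBuilder(with_pip=False).create(env_dir)

    # pyvenv.cfg marks the directory as a virtual environment and records the base Python.
    config = (env_dir / "pyvenv.cfg").read_text()
    print(config)

# In an activated venv sys.prefix differs from sys.base_prefix; here we only report it.
print("running inside a venv:", sys.prefix != sys.base_prefix)
```

The `include-system-site-packages = false` line in pyvenv.cfg is what keeps the shared site-packages out: the new environment starts with its own, empty set of packages.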

Unfortunately, a Python venv does not solve dependency conflicts between sub-dependencies. Usually, Pythonistas have to resolve such problems by hand, for example by boldly adjusting dependency versions, forking the conflicting dependencies, or even nesting one venv inside another.

Docker, the last solution

Instead of working hard on algorithmic improvements to the package manager, Docker took a different approach. Docker packages the application into a container and runs it in an isolated Linux environment. Programs inside and outside the container can communicate with each other like ordinary programs, through mechanisms such as IPC and sockets.

Packaging an application into a container bundles not only the application itself but also all of its dependencies into a portable image. We don’t need to worry about dependency conflicts at all, because the inside of a Docker container behaves like an independent “lightweight virtual machine”, and containers do not interfere with each other except through the interfaces planned in advance. Dependency handling is something the publisher of the Docker image needs to worry about; as a user, you can use the image as soon as you get it, because everything in it is already packaged.

Of course, Docker also has drawbacks. The most obvious one is its space and resource usage, which is much smaller than that of a real virtual machine but larger than that of an installation through a well-designed package manager. Still, hard disk space is becoming less valuable nowadays. If Docker can save 8 hours of painful installation and dependency-conflict resolution, what does an extra 500 MB of disk space matter?

Further: Nix

Nix is a purely functional package manager. This means that it treats packages like values in purely functional programming languages such as Haskell — they are built by functions that don’t have side-effects, and they never change after they have been built. Nix stores packages in the Nix store, usually the directory /nix/store, where each package has its own unique subdirectory such as /nix/store/b6gvzjyb2pg0kjfwrjmg1vfhh54ad73z-firefox-33.1/ where b6gvzjyb2pg0... is a unique identifier for the package that captures all its dependencies (it’s a cryptographic hash of the package’s build dependency graph). This enables many powerful features.

You can have multiple versions or variants of a package installed at the same time. This is especially important when different applications have dependencies on different versions of the same package — it prevents the “DLL hell”. Because of the hashing scheme, different versions of a package end up in different paths in the Nix store, so they don’t interfere with each other.

An important consequence is that operations like upgrading or uninstalling an application cannot break other applications, since these operations never “destructively” update or delete files that are used by other packages.
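The hashing idea is easy to imitate in Python. Note that this is only a toy: the real Nix hashes a full build description and uses its own encoding, while the `store_path` function below is made up for this post and merely hashes a name, a version and the paths of the dependencies.

```python
import hashlib
from typing import Sequence


def store_path(name: str, version: str, dep_paths: Sequence[str] = ()) -> str:
    """Derive a store path from the package identity and the paths of its dependencies."""
    digest = hashlib.sha256()
    digest.update(f"{name}-{version}".encode())
    for dep in sorted(dep_paths):
        digest.update(b"\0" + dep.encode())
    # Toy scheme: truncated hex digest, not the encoding Nix really uses.
    return f"/nix/store/{digest.hexdigest()[:32]}-{name}-{version}"


libfoo_12 = store_path("libfoo", "1.2")
libfoo_13 = store_path("libfoo", "1.3")
app1 = store_path("app1", "1.0", [libfoo_12])
app2 = store_path("app2", "1.0", [libfoo_13])

# Two versions of libfoo get distinct paths, so both can be installed side by side.
print(libfoo_12 != libfoo_13)
# Building app1 against the other libfoo yields a new path instead of overwriting app1.
print(store_path("app1", "1.0", [libfoo_13]) != app1)
```

Compare this with the libfoo 1.2 versus 1.3 conflict from earlier: here app1 and app2 each point at their own libfoo path, and nothing has to be replaced in place.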

This blog post is licensed under CC-BY-SA 4.0. Sources are cited throughout the footnotes.

[2] These problems are largely solved in newer versions (≥7) of npm.

[3] venv: Creation of virtual environments, Python 3.13.0 documentation.